Do Data Scientists do Data Engineering?

Data Engineering is a common task for any Data Scientist. Sure, we rarely do the “real” Data Engineering, e.g. loading data from a business system into a relational database. Rather, we work with data that already is in a database, and sometimes (if we’re lucky) it’s put there by a Data Engineer. We usually transform this data into an analytical format from which we can derive aggregations, visualizations and statistical models. In the start of any Data Science project, these jobs may be simple and not always of any significant concern but as your Data Science products get used more and more the importance of your data will increase.

Therefore it might be a good idea for any Data Scientist to invest some time into data

validation. There are many frameworks for data validation in different

programming languages. But Data Scientists usually feel comfortable

using tools they know, such as R and Python. R is a popular functional

programming language for data analysis. However, even though data

analysis is what R is famous for, the language can do a lot more besides

statistics. In this post we’ll take a look at the R

package pointblank that have made data validation and data quality

controls easy to do in R. pointblank also produces pretty tables for your

data validation steps, and I love pretty things. But there’s more to the package

than being easy and pretty, it’s a serious tool for any Data Scientist who wants

to get control over their data.

Using Snowflake in R

Data can come in many formats, but for this blog post I’ll focus on

databases and especially the increasingly popular database Snowflake.

Once you have created an odbc-DSN for your database it’s usually straight

forward to connect to any database in R using the packages odbc and

DBI.

library(DBI)

library(odbc)

con <- dbConnect(odbc(), "example_database")

The database we use here is populated with a couple of million rows of

district heating data and is a part of a research project that Solita Sweden is assisting.

This means that the data cannot be processed in memory in R. Thankfully,

the arguably most popular data manipulation

package in R dplyr has a database backend that can translate R code

into SQL. This means that we don’t have to bother with more than one

data manipulation framework at a time. With that said, most Data

Scientists should know some SQL, because sometimes the translations

don’t work as expected. But in 95% of the cases you can write R code

instead of SQL, which usually saves me a lot of time.

For instance, if I want to calculate how many observations there are in a table and

group the result by a column I can use dplyr code on a database table:

library(tidyverse) ## dplyr is included in the package tidyverse

library(dbplyr)

metering_tbl <- tbl(con, in_schema("PUBLIC", "METERING_READINGS_TBL"))

## Use dplyr code on the database table

property_tbl <- metering_tbl %>%

group_by(PROPERTY, UNIT_OF_MEASURE) %>%

summarise(

n = n(), #count all records

mean_value = mean(VALUE),

sd_value = sd(VALUE)

)

property_tbl

## Warning: Missing values are always removed in SQL.

## Use `mean(x, na.rm = TRUE)` to silence this warning

## This warning is displayed only once per session.

## # Source: lazy query [?? x 5]

## # Database: Snowflake 4.40.1[filipw@Snowflake/xxx]

## # Groups: PROPERTY

## PROPERTY UNIT_OF_MEASURE n mean_value sd_value

## <chr> <chr> <dbl> <dbl> <dbl>

## 1 return_temperature c 219273351 37.5 3118.

## 2 flow_temperature c 223159867 66.2 52.4

## 3 energy MWh 116629833 6858. 768893.

## 4 flow m3 132111599 1837. 961871.

On the backend R sends SQL-code to Snowflake and returns the result. We can see the SQL code generated:

show_query(property_tbl)

## <SQL>

## SELECT "PROPERTY", "UNIT_OF_MEASURE", COUNT(*) AS "n", AVG("VALUE") AS "mean_value", STDDEV_SAMP("VALUE") AS "sd_value"

## FROM PUBLIC.METERING_READINGS_TBL

## GROUP BY "PROPERTY", "UNIT_OF_MEASURE"

When we’ve aggregated all these million rows we can import the data into R and do all the things R is great for: visualizations, statistical modeling and reporting.

But this post is not about that, rather we’ll use R for something it is not famous for, but really good at: data validation.

pointblank

The package pointblank is one of many data validation packages in R,

check out assertr, validate and dataMaid for other examples.

However, I’ve been extremely impressed by the work of the creator behind

pointblank Rich Iannone. The documentation of pointblank is really

something special. Furthermore, the time spent on tests

give us an indication of the ambition behind the package, there are more

than 3000 unit tests for this package.

Anyways, let’s get down to it. You can use pointblank on data in

memory (maybe data comes from a csv-file or a json-file), but since

pointblank leverages the dplyr SQL backend you can also use it on

database tables.

So let’s do that. We can start by taking a look at a database table in our database. This table holds hourly observations of District Heating consumption. Data is imported from a District Heating network on a daily basis. It comes in JSON but I have an R-script that transforms it into a table that can be used for statistical analysis. Since the original format is in JSON there is a risk that the format might change, so data validation of this table seems like a natural step.

tbl(con, in_schema("PUBLIC", "METERING_READINGS_TBL")) %>%

select(-SOURCE_INSTANCE_NAME)

## # Source: lazy query [?? x 6]

## # Database: Snowflake 4.40.1[filipw@Snowflake/xxx]

## IMPORT_TIMESTAMP TIMESTAMP METERING_POINT_… PROPERTY

## <dttm> <dttm> <chr> <chr>

## 1 2020-10-31 02:43:48 2020-07-04 12:00:00 cf767121-4f64-4… flow_te…

## 2 2020-10-31 02:43:48 2020-07-04 12:00:00 cf767121-4f64-4… return_…

## 3 2020-10-31 02:43:48 2020-07-04 13:00:00 cf767121-4f64-4… flow_te…

## 4 2020-10-31 02:43:48 2020-07-04 13:00:00 cf767121-4f64-4… return_…

## 5 2020-10-31 02:43:48 2020-07-04 14:00:00 cf767121-4f64-4… flow_te…

## 6 2020-10-31 02:43:48 2020-07-04 14:00:00 cf767121-4f64-4… return_…

## 7 2020-10-31 02:43:48 2020-07-04 15:00:00 cf767121-4f64-4… flow_te…

## 8 2020-10-31 02:43:48 2020-07-04 15:00:00 cf767121-4f64-4… return_…

## 9 2020-10-31 02:43:48 2020-07-04 16:00:00 cf767121-4f64-4… flow_te…

## 10 2020-10-31 02:43:48 2020-07-04 16:00:00 cf767121-4f64-4… return_…

## # … with more rows, and 2 more variables: UNIT_OF_MEASURE <chr>, VALUE <dbl>

pointblank usage

In pointblank we first create an agent that defines which table we

want to validate. Then we use a validation function (these can also be

tests and expectations) to do our validation, e.g. col_is_character().

Lastly we use the function interrogate() to do the actual validation.

So for this table we might be interested in checking the format of the columns:

library(pointblank)

agent <-

create_agent(

read_fn = ~tbl(con, in_schema("PUBLIC", "METERING_READINGS_TBL")),

tbl_name = "METERING_READINGS_TBL"

) %>%

col_is_character(vars(METERING_POINT_ID, PROPERTY, UNIT_OF_MEASURE)) %>%

col_is_numeric(vars(VALUE))%>%

col_is_posix(vars(IMPORT_TIMESTAMP, TIMESTAMP)) %>%

interrogate()

When the “interrogation” is complete we can print the agent and we’ll get a pretty table telling us what has happened.

agent

| Pointblank Validation | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| \[2020-12-04|16:02:03\] </p> METERING\_READINGS\_TBL | |||||||||||||

| STEP | COLUMNS | VALUES | TBL | EVAL | ⋅ ⋅ ⋅ | PASS | FAIL | W | S | N | EXT | ||

| 1 |

col\_is\_character()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

— |

— |

— |

— |

||

| 2 |

col\_is\_character()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

— |

— |

— |

— |

||

| 3 |

col\_is\_character()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

— |

— |

— |

— |

||

| 4 |

col\_is\_numeric()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

— |

— |

— |

— |

||

| 5 |

col\_is\_posix()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

— |

— |

— |

— |

||

| 6 |

col\_is\_posix()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

— |

— |

— |

— |

||

| 2020-12-04 16:02:10 CET1.9 s2020-12-04 16:02:12 CET | |||||||||||||

Defining actions

Another useful feature is to define actions with action_level() to

decide what should happen if a validation function fails. For instance,

we might want to check that all the dates in our table are within a

certain range, and if they’re not we’d like to either add a warning or

if the amount of fail exceeds a certain limit we’d like to stop.

Below I’ve added a validation function to make sure that all values in TIMESTAMP

are between two dates:

agent_warn <-

create_agent(

read_fn = ~tbl(con, in_schema("PUBLIC", "METERING_READINGS_TBL")),

tbl_name = "METERING_READINGS_TBL",

actions = action_levels(warn_at = 1000, stop_at = 200000)

) %>%

col_is_character(vars(METERING_POINT_ID, PROPERTY, UNIT_OF_MEASURE)) %>%

col_is_numeric(vars(VALUE))%>%

col_is_posix(vars(IMPORT_TIMESTAMP, TIMESTAMP)) %>%

col_vals_between(vars(TIMESTAMP), "2016-01-01", "2020-10-07 23:00:00") %>%

interrogate(extract_failed = FALSE)

agent_warn

| Pointblank Validation | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| \[2020-12-04|16:02:14\] </p> METERING\_READINGS\_TBLWARN 1,000 STOP 200,000 NOTIFY — | |||||||||||||

| STEP | COLUMNS | VALUES | TBL | EVAL | ⋅ ⋅ ⋅ | PASS | FAIL | W | S | N | EXT | ||

| 1 |

col\_is\_character()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

○ |

○ |

— |

— |

||

| 2 |

col\_is\_character()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

○ |

○ |

— |

— |

||

| 3 |

col\_is\_character()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

○ |

○ |

— |

— |

||

| 4 |

col\_is\_numeric()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

○ |

○ |

— |

— |

||

| 5 |

col\_is\_posix()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

○ |

○ |

— |

— |

||

| 6 |

col\_is\_posix()

|

— |

→ |

✓ |

1

|

11.00

|

00.00

|

○ |

○ |

— |

— |

||

| 7 |

col\_vals\_between()

|

|

→ |

✓ |

691M

|

691M0.99

|

183K0.01

|

● |

○ |

— |

— |

||

| 2020-12-04 16:02:20 CET4.2 s2020-12-04 16:02:24 CET | |||||||||||||

As you can see we have 183K observations that are not in the date range which will only cause a warning.

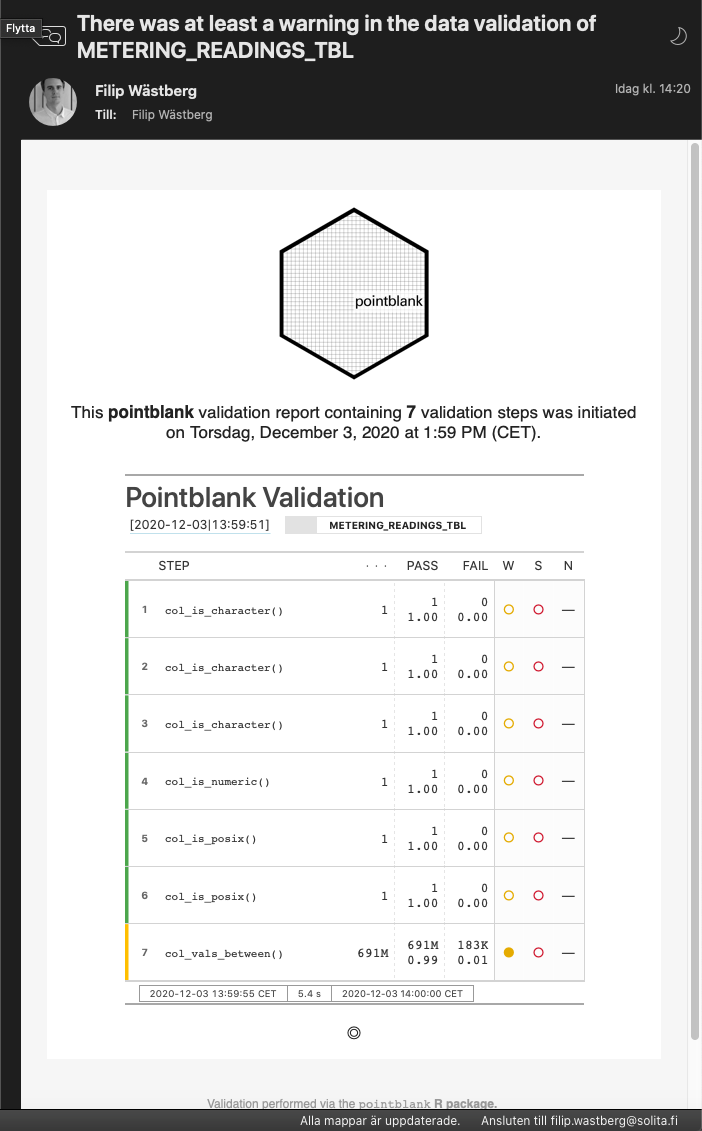

Creating notification emails

Many data validation tasks, at least for a Data Scientist, look

something like this: you want to check that the data behaves as you

expect, and if not you would like to get some kind of notification.

pointblank has great functionality for this and makes it easy to write

data validation reports that may be sent out by email through the

blastula package, which is also created by Rich Iannone.

To create a skeleton for an email we just use email_create() on the

agent.

Below I create a simple function that simply sends an email if the not all steps are passed.

library(blastula)

send_validation_email <- function(agent){

if(!isTRUE(all_passed(agent))){

email_agent <- email_create(agent)

table_name <- agent$tbl_name

subject <- glue::glue("There was at least a warning in the data validation of {table_name}")

email_agent %>%

smtp_send(

to = "filip.wastberg@solita.fi",

from = "filip.wastberg@solita.fi",

subject = subject,

credentials = creds_key(id = "outlook")

)

} else {

message("Everything passed, no need to send email.")

}

}

send_validation_email(agent_warn)

Which, if there are any warnings, result in this mail:

Of course, this is a simple example and can be further developed. This

can also be configured to be run with Docker or a Data Science platform

such as RStudio Connect,

which would be the recommendation if something like this is to be run in

“production”.

And it doesn’t end here. pointblank can generate YAML-files for you

and the validation functions are really flexible if your needs are more

specific.

Summary

R is often regarded as a niche programming language. And there is no

reason to sugar code it, R is a weird language. But today it is one of

the top ten most popular programming

languages (not bad for a niche

language), it has over 15 000 open-source libraries and is used for

everything from building machine learning models to advanced web

applications and, as we have showed here, data validation. Furthermore,

the development of R the last few years have had a lot of focus on

syntax and making it easy to use, an often overlooked part of

programming development, and this, I think, is one reason it continues

to attract more users. pointblank is one of those packages that makes R useful and a joy to use, it takes a

complex task and make it look easy through a carefully thought through API.

And last but not least, it is pretty. And we love pretty things.