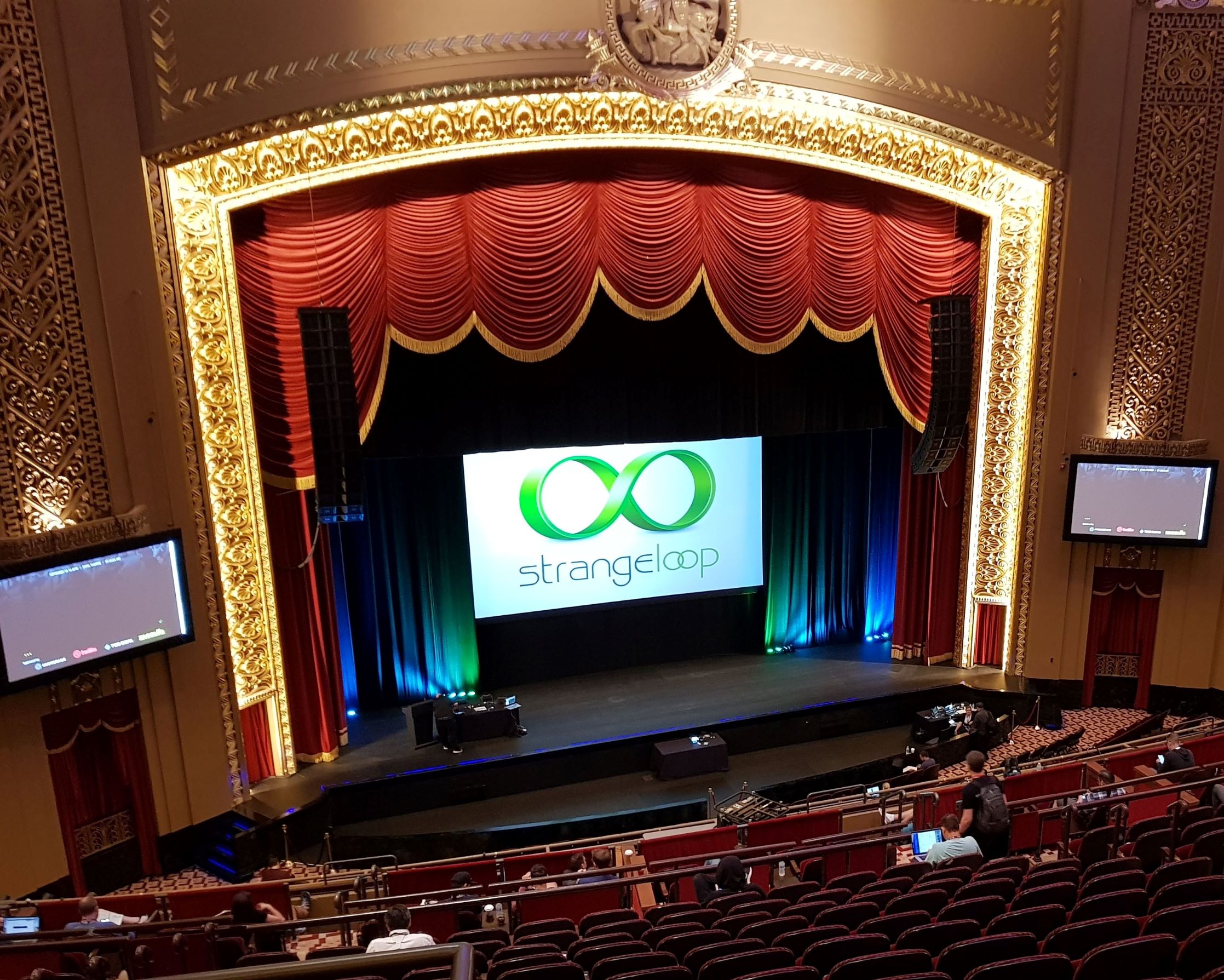

This year’s Strange Loop was held in St. Louis, Missouri on September 12-14, and I had a chance to attend. It was an interesting and worthwhile experience, with many fascinating talks and lots of interesting people to talk to. In total there were roughly 1750 conference goers in St. Louis.

There were eight tracks in the main conference this year, so seeing everything was clearly impossible. Thankfully videos of almost all the talks are available, and a lot were also liveblogged. Other coverage of the event can also be found in the official wiki.

With so many options available, I made my choices mostly based on how intriguing the talk seemed. Missing an essential tech talk would mean just watching a video later. I’m also not going to try to recap every talk, just the few I found most interesting.

elm-conf / day 0

The main conference was just two days, but there were several pre-conference options for the preceding day. Picking elm-conf was easy. I haven’t used Elm professionally and remain a bit skeptical about some aspects, but the goals of the language certainly resonate. Simplicity, avoiding runtime errors and a great developer experience in general are useful in any environment.

Thankfully elm-conf only had a single track, so there was no need for any hard choices straight away.

A Month of Accessible Elm, Brooke Angel

Accessibility seems an increasingly visible topic in software development these days. The tools and processes could still use a lot of improvement.

The talk (slides) started with creating and keeping habits, specifically about how to make good habits a part of a software development process. Picking something small is usually a good way to get started and it’s easier to keep the habit going as well.

The more technical parts of the talk covered how both types and tests can help with accessibility. A strict type system like Elm’s can make it very hard for a developer to produce inaccessible web sites – at least accidentally. Likewise, if your test library only allows clicking buttons, it’s going to correctly guide you to use those instead of making other elements clickable.
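The idea of letting types rule out inaccessible markup can be sketched in a few lines. This is only an illustration, written in Python rather than Elm; the names `Image` and `render_image` are hypothetical and don't come from the talk.

```python
from dataclasses import dataclass
from html import escape


@dataclass(frozen=True)
class Image:
    src: str
    alt: str  # no default value: an Image cannot be built without alt text


def render_image(image: Image) -> str:
    # Escape both attributes so the generated markup stays well-formed.
    return f'<img src="{escape(image.src)}" alt="{escape(image.alt)}">'
```

Leaving out `alt` here fails as soon as the `Image` is constructed, and a static type checker would flag the call before the code runs. In a language with a stricter compiler the same mistake could be caught even earlier.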

Additional advice included making the test cases as close to the actual action as possible. This could mean avoiding technical selectors like CSS class names for example. Browser extensions like axe were also mentioned.

Game Development in Elm: Build your own tooling, James Gary

While Elm is a nice platform for building games, the lack of available tooling can be a problem. Building your own tooling is easy though.

Types are very helpful in many situations but can’t be used for everything. For example, a game with physics is going to have a lot of numerical values to tweak. Even with short compile times and live reload, the feedback loop can get unnecessarily long.

One way to make these adjustments more rapidly is to create a browser interface to tweak the variables. elm-config-ui is a tool to create these interfaces. A similar technique could be used for adjusting parameters outside game development as well.

elm-conf recap

Notably, many of the elm-conf talks were about hobby projects and not “serious work”. Most talks were very interesting, but I wouldn’t have minded seeing some talks about larger scale projects and solutions to problems that tend to crop up at that scale.

Elm remains a small programming language at least for now, but the overall feeling at the conference seemed to be enthusiastic. Perhaps the killer application for Elm is around the corner.

Overall attending elm-conf was a great experience. Embracing and using existing web standards and tools seemed to be a popular approach. It was easy to pick up a lot of interesting ideas – and not just for Elm development.

Day 1

How to teach programming (and other things), Felienne Hermans

The opening keynote was a strong start to the main conference. There are certainly many misconceptions about teaching and learning programming, and thinking about these is useful for any programmer, especially when working in a team.

The talk focused on exploration vs. explanation. Exploration is often touted as, if not the only way, then at least the only true way to learn programming. While exploring is valuable, explanation can help in many ways. For one, it can help avoid being overwhelmed with new concepts. Explaining a topic can overcome short-term memory limits and thus help with the actual learning.

There’s also the way programming is talked about. While making it sound easy likely isn’t the way to go, phrases like “programming is hard” or “you’re going to fail again and again” aren’t a great fit for every situation either. Likewise, calling something “not real programming” doesn’t seem inclusive. If Excel users don’t even realize they are programming, isn’t that a mark of a great programming tool, not a bad one?

After all, motivation doesn’t really produce skill, it’s closer to the other way around.

Typing the Untyped: Soundness in Gradual Type Systems, Ben Weissmann

Gradual type systems are becoming very popular in dynamically typed programming languages. This talk contrasted the design of the TypeScript type system with gradual type systems built for some other dynamic languages.

The issues covered (slides) can be split into three categories: errors that won’t be caught (soundness), valid code that won’t compile (completeness) and overly complicated types. These aspects are often at odds: making a programming language more sound can make it less complete or more complicated to use.

A language’s history and how it’s used can determine the reasonable level of soundness for a feature. Loops and array index access are very common at least in legacy JavaScript, so making array access fully sound in TypeScript would require sacrificing usability. In Ruby indices are used a lot less, so making index access sound makes more sense. In Python the precedent is clear: using an invalid index is an error anyway.
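The Python case can be shown with a minimal sketch. The function names are hypothetical; the point is only that the type hints say nothing about whether an index exists, and the runtime raises `IndexError` when it doesn't.

```python
from typing import List, Optional


def first_score(scores: List[int]) -> int:
    # A type checker accepts this: indexing a List[int] yields an int.
    # Whether index 0 actually exists is only checked at runtime.
    return scores[0]


def safe_first_score(scores: List[int]) -> Optional[int]:
    # Making the possible absence explicit in the return type instead.
    try:
        return first_score(scores)
    except IndexError:
        return None
```

Because the invalid index already fails loudly at runtime, a gradual type system for Python doesn't have to prove index validity to stay consistent with how the language behaves.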

Often there is no optimal solution and even statically typed languages may not guarantee soundness of some types. If only immutable values are needed, the types are easier to define correctly as well.

Finding bugs without running or even looking at code, Jay Parlar

This talk was mostly about proof checkers but started with some general ideas about finding bugs by simply talking with various experts and confirming assumptions. This could lead to early (or late) documentation and even an unstructured document can be helpful in tracking errors in thinking and expectations.

With Alloy it’s possible to build structured models of both existing and new systems and then test the assumptions about how they work. It can be used to find security holes or more general logic issues in business models.

Alloy seemed powerful and easy enough to be used practically even in relatively simple projects. In more complex projects, using proof checkers to get some guarantees about the system seems like an easy win.

Uptime 15,364 days - The Computers of Voyager, Aaron Cummings

This talk was an exploration of how the computers of the Voyager probes are still working more than forty years after leaving Earth. This involves all kinds of redundancy, from duplicated parts to using very stable and proven technology where possible. Even so, Voyager included the first CMOS computer in space.

During the early design, the ionizing radiation around Jupiter hadn’t even been discovered. The design was adjusted for this prior to launch, but many issues came up after launch as well. The most fascinating part of the talk was how some parts of the probes have been repurposed or reprogrammed on the fly to take on tasks that have become more important.

With the distance of the probes to Earth continuously increasing, the available bandwidth is also steadily decreasing. There should still be enough bandwidth to send data back until the power sources start failing. That may happen in roughly five years. After that, both probes will continue silently as they’ve reached solar escape velocity.

ASTRIAGraph: Monitoring Global Traffic in Space!, Moriba Jah

The afternoon keynote unfortunately had to be conducted remotely, but thankfully there were only minor technical issues.

There are currently about 26,000 tracked objects in space. These are all cellphone size or larger. Of these, only roughly 2,500 work. And it’s estimated that there are actually half a million objects in total in orbit that could harm a satellite.

The number of satellites is also going to increase rapidly with new low-orbit communication satellite constellations being launched. This needs transparency and predictability to work. For example, both the orbit adjustment procedures and how the adjustments are communicated vary a lot currently. ASTRIAGraph already includes information from several government bodies, commercial providers and amateurs as well.

Just having a lot of detection data unfortunately isn’t enough. To track something and make useful predictions, uniquely identifying the object is also needed. Having a lot of data can be helpful here as well. Even information from something like tweets can be useful in building a model. If your data sources don’t agree where an object is exactly – and they generally don’t – bringing in even more data can help solve the problem with statistical means.

Day 2

Probabilistic Scripting for Automating Common-Sense Tasks, Alexander Lew

This talk consisted mainly of an example of using probabilistic programming to clean up a dirty data set. The primary aim is to create tools for machine learning, but the idea could be applied in other programming contexts as well.

For small data sets, cleaning can be done by hand. For larger sets, some form of automation is needed. A simple computer program can, however, make mistakes even when correcting basic typos. Neural networks and other machine learning techniques have also been found to produce both faulty data and models that are hard to maintain.

Probabilistic scripting aims to solve this with analysis instead of heuristics. This means creating scripts that may require domain knowledge. One example would be to use city population data to fill and fix values of a city field. Tools based on Gen can also use existing, correct values in the data to fix the dirty values. Going one step further, it’s also possible to learn which values are valid and which are dirty – just from the data.
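The city population example can be sketched with plain Bayes’ rule. This is not Gen’s API or the speaker’s code, just an illustration under assumptions of my own: the population figures are rough illustrative numbers, the likelihood is a made-up `typo_rate` raised to the edit distance, and all function names are hypothetical.

```python
from typing import Dict

# Illustrative figures only, not authoritative population data.
POPULATIONS = {"Paris": 2_100_000, "Parma": 200_000, "Prague": 1_300_000}


def edit_distance(a: str, b: str) -> int:
    # Classic Levenshtein distance computed row by row.
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(
                min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost)
            )
        previous = current
    return previous[-1]


def posterior(
    observed: str, populations: Dict[str, int], typo_rate: float = 0.05
) -> Dict[str, float]:
    # Prior: proportional to population.
    # Likelihood (assumed): each edit is an independent typo with
    # probability typo_rate.
    weights = {
        city: population * typo_rate ** edit_distance(observed, city)
        for city, population in populations.items()
    }
    total = sum(weights.values())
    return {city: weight / total for city, weight in weights.items()}


def most_likely_city(observed: str, populations: Dict[str, int]) -> str:
    scores = posterior(observed, populations)
    return max(scores, key=scores.get)
```

The dirty value “Parms” is one edit away from both “Paris” and “Parma”, so spelling alone can’t decide between them. The population prior tips the result toward the larger city, which is the kind of domain knowledge the talk described.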

Parser Parser Combinators for Program Transformation, Rijnard van Tonder

Code transformation using abstract syntax tree manipulation can get complicated even for simple changes. The concrete syntax and the AST can also diverge. On the other hand, regular expressions often aren’t expressive enough to make changes. Finally, making changes in many languages requires tools that understand all those languages.

Comby is a tool that allows changing code across many languages. This means a lot of parsers. The examples given in the talk explain the process quite well. Besides code transformations, similar techniques could be used for syntax highlighting as well.

Performance Matters, Emery Berger

This talk tackled several important misconceptions about performance and performance testing: unintended low-level effects, eyeball statistics and focusing on the right parts of asynchronous processes.

Often running a basic test isn’t directly going to tell you whether a change was positive. Even if the result is statistically significant, an algorithmic change can easily produce effects that are not directly caused by the optimization. Further changes to the code can then reverse the gains. Pretty much anything can affect performance to some level: memory layout, cache, the branch predictor and so on. Even the length of your username (an environment variable) can affect performance.

Stabilizer is a tool for rigorous performance testing that randomizes things like function addresses, heap allocations and stack frame sizes. Randomizing these makes the test environment unbiased and allows (more) reliable test results.
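Once there are repeated measurements for two versions of a program, eyeball statistics can be replaced with an actual test. The sketch below is not Stabilizer and not taken from the talk; it’s a plain permutation test I picked for illustration, assuming two lists of run times collected elsewhere. The function name and parameters are hypothetical.

```python
import random
from statistics import mean
from typing import Sequence


def permutation_p_value(
    baseline: Sequence[float],
    candidate: Sequence[float],
    rounds: int = 2000,
    seed: int = 0,
) -> float:
    # How often does a random relabeling of the measurements produce a
    # difference in means at least as large as the observed one?
    rng = random.Random(seed)
    observed = abs(mean(baseline) - mean(candidate))
    pooled = list(baseline) + list(candidate)
    split = len(baseline)
    at_least_as_extreme = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        difference = abs(mean(pooled[:split]) - mean(pooled[split:]))
        if difference >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / rounds
```

A small value suggests the difference is unlikely to be noise in the measurements. As the talk pointed out, it still doesn’t show that the speedup comes from the optimization itself rather than from a lucky memory layout.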

In modern software, focusing on the slowest or most frequently executed part of your program may not be important. If the overall flow is asynchronous, a slow part might not matter. In a web context a traditional profiler also won’t work for measuring overall performance. The Coz profiler uses causal profiling to find the parts that would be most important to optimize for overall performance.

Conclusions

Strange Loop was a great conference to attend. The topics were varied, and while not everything was directly applicable to the day-to-day work of a developer, this helped with discovering many new ideas. Every talk I saw was also professional and clearly presented. If I had to recommend just one talk to watch, it would be either the more technical Performance Matters or the more general How to teach programming (and other things).

The overall buzz of the event was also very positive, as anticipated. People were eager to talk not only about things they’d done or companies they represented, but also about other talks they’d seen or ideas they’d gotten.

Besides the main talks, there was the official City Museum party, some unsessions and events like the Dark launch party. Highly recommended for any curious software developer!